Advanced Multi-Query RAG Techniques for Better Chatbot Experiences

generated using TEV1

As everyone knows, RAG provides a LLM with additional context for more relevant responses but there are all sorts of techniques to better improve the RAG process.

In this article, we look at RAG processes that you can use for various use cases to improve the quality and relevance of LLM responses. In particular, we will focus on multi-query as well as smart query configurations you can easily add into your RAG pipeline.

The Traditional RAG Process

The standard RAG process involves a single query and normally a centralized vector database which holds your documents or data. This data can be about your business, a PDF you are analysing, anything!

The first step is to convert your query into an embedding before using distance minimisation algorithms to compute the most local and hence closest matching embeddings within your vector database.

These are converted back into natural language to be added to a prompt to send to the LLM. The additional context about your business or PDF is inserted into a prompt to guide the LLM’s response.

This works if your vector database documents have great resemblance to the queries you intend to use on your LLM but your data might be a bit more unstructured.

In the case, of it being more unstructured, simple queries may lack the required information to accurately retrieve the relevant information from the vector database.

I think most people who develop RAG solutions will have ran into this issue where the information is definitely in the vector database but it seems the query is not yielding the results you would like.

You end up looking like this confused donkey below.

"RAG" - Confused Donkeh

Background on RAG

For a more detailed look at embeddings and how they work, check out this article.

Using Vector Embeddings Instead of Literal Strings

It’s not feasible or easy to measure the similarity of text using the actual characters hence the creation of the approach which involves vectorizing text into numerical arrays.

When we compare sentences for similarity, we are comparing the similarity of the numbers in each of their vectors but it’s effectively the same as trying to match based on their characters.

Numbers are just easier to manipulate.

Papers & Research Relating to Vector Embeddings:

For a more detailed understanding of embeddings refer to the following papers which are milestone papers in the field of NLP:

Word2Vec: Mikolov et al. (2013). "Efficient Estimation of Word Representations in Vector Space." This seminal paper introduced Word2Vec, a popular method for generating word embeddings.

BERT: Devlin et al. (2018). "BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding." BERT revolutionized NLP with its powerful contextualized word and sentence embeddings.

Literal String Matching = Literal Results

always something you can do = asycd

Solution — Multiple Queries on VectorDB

Multi-query RAG aims to fix some of the issues with single query RAG by performing multiple searches on vector index.

The degree of variety of queries and the number are key variables in determining the effectiveness of it. If your queries are topic related, then you might be providing your LLM with seemingly unrelated information.

On the other hand, query bundles that are too similar can lead to repeated bits of context which don’t increase the LLMs scope of understanding like intended.

It’s a fine line and a line that we might be able to help you navigate better.

Useful Multi-Query RAG Techniques

Keyword-Based Multi Query

To form your batch of keywords, you can use an algorithm or even another LLM to extract the keywords from a query.

For example, the query “what are the benefits of solar energy in warm climates”, you would extract the following keywords:

benefits

solar energy

warm climate

Performing separate vector searches in this manner will provide the LLM with data relating to each of the keywords from the vector database. This will lead to increased contextual awareness for the LLM but there are few things to note with this technique:

Token Usage — Since you are retrieving more documents, the tokens used as input to the LLM increase.

Keywords and phrases still might not yield relevant results for the query if keyword searches are too broad — It’s best to focus on key or relevant phrases instead of just single words.

Heavily dependent on the structure and contents of the vector database.

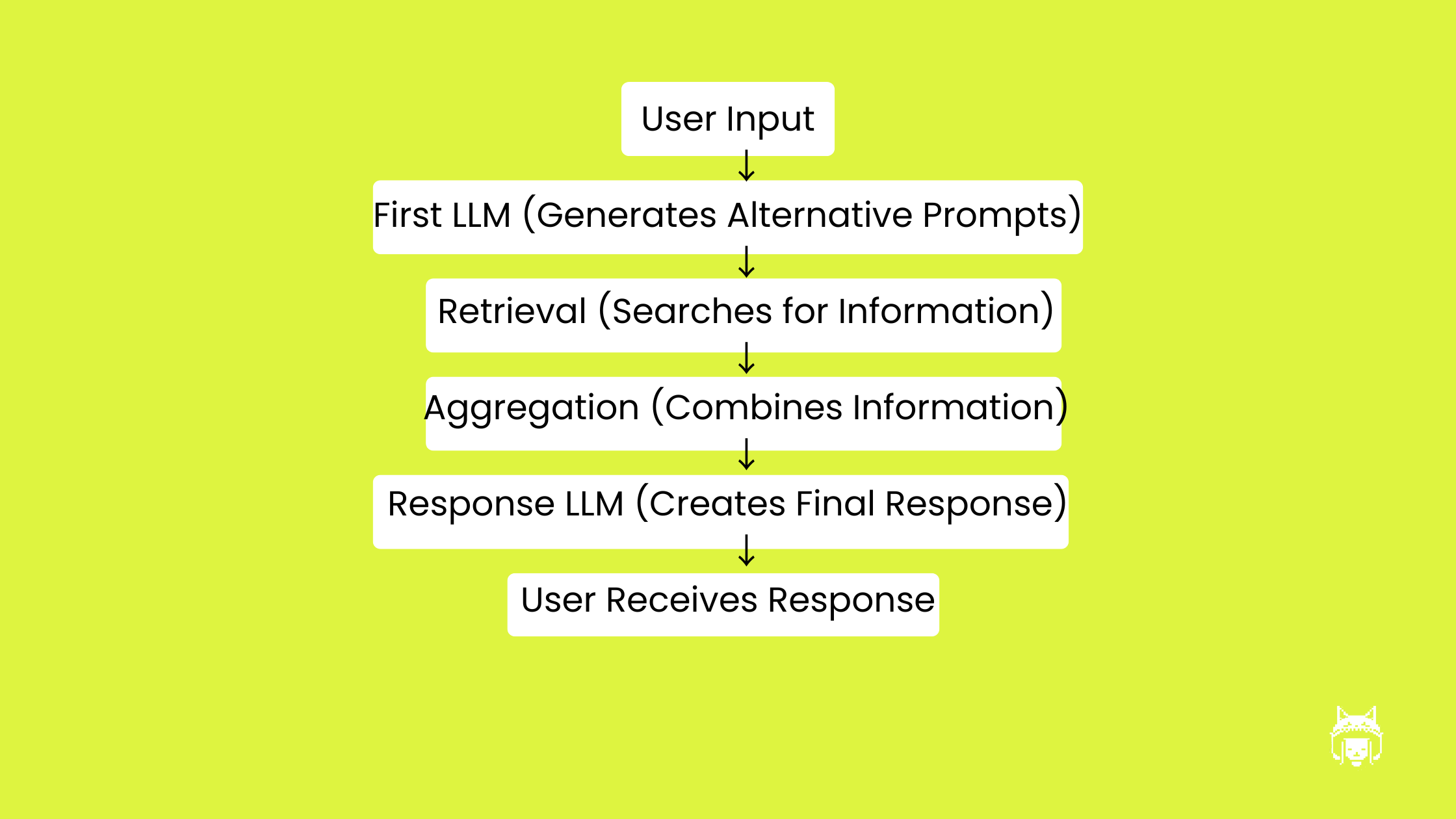

LLM Assisted Multi Query RAG

Below is an example function that will take the input of the original prompt and the desired number variations. The function will simply generate alternative prompts to be used later in a multi-query RAG process. Performing individual queries on a vector database using each of the prompts.

LLM Assisted RAG Flow Diagram

generate_similar_prompts function

You can play around with prompt and temperature to see how the variety of prompts affects relevance of retrieved documents.

While highly effective, the LLM-Assisted Multi Query RAG approach also has some limitations:

Token Intensive — Similar to Keyword RAG, generating multiple queries through an LLM can consume a significant number of tokens, which might be a consideration for projects with limited API usage.

Context Control — Since the initial query generation is handled by another LLM, you might experience less direct control over how the context is shaped and retrieved from the vector database.

To address these limitations, you can refine the system prompt to better guide the process of creating alternative queries, ensuring alignment with your data and objectives. By carefully crafting the instructions given to the LLM, you can mitigate the loss of control and steer the outputs to be highly relevant and contextually accurate.

Context-Guided Multi Query — Untested by Us

Arguably our most complex RAG idea and we have many!

Let’s say you are utilizing a local chatbot ideally with conversation history enabled, you could use the previous conversation as additional context for further queries.

Using a multi-llm approach similar to the ReAct process we covered in another article, you can create a pipeline which finds relevant potential queries based on your chat history.

For instance, let’s say you were talking to Asyra which is our AI assistant about writing an article about the benefits of a good nights sleep.

asyra ai voice assistant

The conversation might be drifting from topic to topic but there is main consensus of ideas to gather potential prompts from. You could ask an LLM to generate potential ‘alternative queries’ based on the chat history.

From there, you could perform the normal multi-query RAG process to find relevant details from the database which might contain research about sleep, psychology and more. That would lead to higher relevance in theory but how can we test it?